Sam Hughes speaks to Chance Thomas about working on The VOID, an incredible new experience that combines the use of real effects, Augmented Reality and Virtual Reality.

Chance Thomas is an American composer, educator and entrepreneur. His music has underscored blockbuster commercial success and critical acclaim, including an Oscar™, an Emmy™ and billions of dollars in video game and film sales worldwide. Game credits include DOTA 2, Lord of the Rings Online, James Cameron’s Avatar, Heroes of Might and Magic, Peter Jackson’s King Kong and many more. Outside of gaming, his music can be heard on hit television shows like The Bachelorette, Pawn Stars, Lost Treasure Hunt and others.

Chance is the author of a new university textbook, Composing Music for Games, published by CRC International Press. Composing Music for Games is the world’s most authoritative and comprehensive guide for launching and maintaining a successful career as a video game composer. As an educator, Chance works with universities, colleges and conferences to help students navigate the intersection of music scoring, technology and business. He has served on advisory boards for Brigham Young University, Full Sail University, the Game Developers Conference, the Academy of Interactive Arts and Sciences, and the Game Audio Network Guild.

Welcome back, Chance! It’s great to have you with us again. How are you?

Hey Sam! I’m good!

So let’s talk about The VOID! I, for one, am very excited about this experience. For the benefit of our readers, can you tell us what The VOID actually is and how it differs from other AR and VR experiences?

The VOID offers a tactile virtual reality experience, so that you can touch, pick up, walk around in, stand on, sit on, pull the trigger and otherwise interact with the amazing things you are seeing and hearing inside the VR helmet. The designers of the experience first build a physical stage, complete with props like torches, guns, lifts, instrument panels, benches, etc., and then create their virtual experiences, which overlay the physical stage in exact proportion.

It’s a location-based experience. Some are calling The VOID a virtual reality theme park. One easy way to envision it is to imagine a laser tag facility that has been upgraded to use virtual reality. But in this case, you are fighting monsters, aliens, ghosts or whatever adversaries inhabit the particular fiction the experience is built around. This, of course, requires sophisticated tracking so that the software is continually updated on your physical location within the experience.

How did you first get involved in the project?

I was attending an industry networking event, and two of the founders made a presentation describing their vision. Then they showed a concept video and I was even more intrigued.

I actually doubted that they could pull it off, but introduced myself anyway. They offered to give me a preview of their prototype experience at their corporate headquarters. We planned a date, I showed up and went through their first two prototypes. Incredible. I felt like I had stepped 20 years into the future. It was the single most exciting thing I had ever experienced in entertainment.

The sound in the prototypes wasn’t very good, so I offered my services. They needed a contractor who could tackle all aspects of audio development and implementation at an exceptional level. They contracted with my audio services company, HUGEsound, to take on the job.

There was a TED conference project recently. Can you tell us more about that and your role in the project?

The TED conference exhibit was The VOID’s public debut and they really wanted to get it right. We built an Indiana Jones experience. It was fantastic. You explore the ruins of an ancient temple, carry a torch, fight a sea monster, ride a rickety elevator, solve mechanical and cosmic puzzles, have spiders crawl all over you, catalyse the collapse of a massive stone temple around you, and fall through the broken floor to your near-death. So awesome.

My role was to design, produce and oversee implementation of all aspects of audio for the TED experience.

How did that go and what kind of responses were you getting?

I loved it. And so did Harrison Ford. And Steven Spielberg. And all the other movers and shakers who attended the conference and went through the experience. It was a huge hit.

Let’s talk more about your HUGEsound team. How many of you are there, and how do you work together?

Terrific team. Michael McDonough (Star Trek, WildStar) on sound effects. Brittani Bateman on VO. Tanner Danielson on audio engineering. I handled audio design and editing, music scoring, admin, and making sure the implementation was right. I also got involved in the headphone design for their VR helmet. (Pictured, left to right: Michael, Brittani, Tanner.)

What kind of hardware and software are you guys working with?

Top-shelf stuff. Absolutely high-end. The VOID is a destination experience. They don’t need to sell hardware to consumers, so they can afford to pull out all the stops. Here is an example of the headphone specs:

- Driver size: 40 mm
- Transducer type: dynamic, neodymium magnet
- Frequency range: 5 Hz to 25,000 Hz
- Sensitivity (at 1 kHz): 102 dB/mW
- Impedance (at 1 kHz): 44 ohms
- Max input power: 1000 mW
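As a rough illustration of how these figures fit together, the standard decibel relation SPL = sensitivity + 10·log10(power in mW) turns the sensitivity and maximum input power into a theoretical peak level, and V = √(P·R) gives the drive voltage needed. This sketch assumes the driver stays perfectly linear all the way to its rated maximum and treats the 1 kHz impedance as purely resistive; real headphones compress well before that point, so the result is a ceiling, not a listening level. The function names are illustrative only.

```python
import math

def spl_at_power(sensitivity_db_per_mw: float, power_mw: float) -> float:
    """Sound pressure level for a given input power, assuming a perfectly linear driver."""
    return sensitivity_db_per_mw + 10.0 * math.log10(power_mw)

def drive_voltage(power_mw: float, impedance_ohms: float) -> float:
    """RMS voltage that delivers power_mw into a resistive load: V = sqrt(P * R)."""
    return math.sqrt(power_mw / 1000.0 * impedance_ohms)

SENSITIVITY_DB_PER_MW = 102.0  # at 1 kHz, from the spec list
IMPEDANCE_OHMS = 44.0          # at 1 kHz, from the spec list
MAX_POWER_MW = 1000.0          # rated maximum input power

print(f"{spl_at_power(SENSITIVITY_DB_PER_MW, 1.0):.1f} dB SPL at 1 mW")             # 102.0 dB SPL at 1 mW
print(f"{spl_at_power(SENSITIVITY_DB_PER_MW, MAX_POWER_MW):.1f} dB SPL at 1000 mW")  # 132.0 dB SPL at 1000 mW
print(f"{drive_voltage(MAX_POWER_MW, IMPEDANCE_OHMS):.2f} V RMS at 1000 mW")        # 6.63 V RMS at 1000 mW
```

Every tenfold increase in power adds only 10 dB, which is why the jump from 1 mW to 1000 mW buys 30 dB of headroom rather than anything dramatic.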

The specs for the goggles are even better. Dual curved OLED displays, 2K per-eye resolution, 180 degree field of view, stereo overlap of 53 degrees… it’s pretty impressive!
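The two field-of-view numbers can be related with a little arithmetic. Assuming the left and right eyes cover symmetric fields, the total horizontal field equals the two per-eye fields minus the region they share, so each eye would cover about (180 + 53) / 2 degrees. This is a back-of-the-envelope sketch based only on the figures quoted above, not the headset’s published per-eye specification; the function names are hypothetical.

```python
def per_eye_fov(total_deg: float, overlap_deg: float) -> float:
    """Horizontal FOV each eye covers, assuming symmetric eyes:
    total = left + right - overlap  =>  per_eye = (total + overlap) / 2."""
    return (total_deg + overlap_deg) / 2.0

def monocular_band(total_deg: float, overlap_deg: float) -> float:
    """Width of the region on each side that only one eye sees."""
    return (total_deg - overlap_deg) / 2.0

print(per_eye_fov(180.0, 53.0))     # 116.5
print(monocular_band(180.0, 53.0))  # 63.5
```

The pieces add back up: 63.5 degrees seen by the left eye alone, 53 degrees of stereo overlap and 63.5 degrees seen by the right eye alone make the full 180.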

All the software was developed in Unity and we utilized the 3Dception audio plug-in.

You must have had an interesting time with sound design and the 3D/pseudo-binaural implementation of SFX?

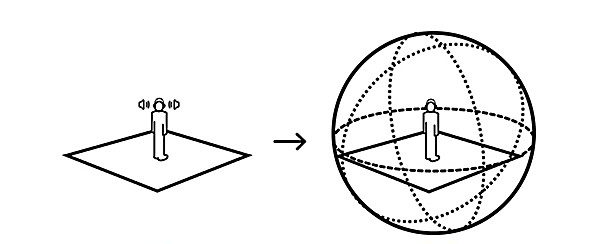

Yes! This was one of the more interesting challenges to solve. The goal was to convey the illusion that people going through The VOID experience were fully immersed in a sphere of audio. That is tough to pull off, even with the high-end math of 3Dception, so I settled on a combination of approaches to sell the illusion.

Some things we implemented right into Unity, creating sound objects that would retain their position even as people swung their heads around. This was good for sounds emanating from a fixed position in the world, such as the grinding shut of a stone door, or the tightening of vines on an old elevator pulley system.

Other sounds were put into 3Dception, hoping its algorithms would do the heavy lifting. This worked really well for the torch that you hold as you explore the caves. As you swing the torch from left to right, the sound goes with it, matching its position relative to your ears quite effectively.
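The common thread in both approaches is that a sound’s direction is recomputed relative to the listener’s head as the head (or, for the torch, the source itself) moves. The sketch below shows that step with a plain constant-power stereo pan. It is not how 3Dception or Unity actually render these sounds: a binaural spatializer such as 3Dception also conveys front/back and elevation cues, which simple panning cannot. The function names, the top-down 2D coordinate convention and the rule for folding rear sources forward are all assumptions made for illustration.

```python
import math

def relative_azimuth(listener_xy, head_yaw_deg, source_xy):
    """Direction of a source relative to where the listener is facing.

    Top-down 2D coordinates: +y is 'north', +x is 'east', yaw is measured
    clockwise from +y. Returns degrees in (-180, 180]: 0 = straight ahead,
    positive = to the right, negative = to the left.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    world_deg = math.degrees(math.atan2(dx, dy))  # clockwise from +y
    rel = (world_deg - head_yaw_deg) % 360.0
    return rel - 360.0 if rel > 180.0 else rel

def constant_power_pan(azimuth_deg):
    """Map a relative azimuth to (left_gain, right_gain) with left^2 + right^2 == 1.

    A stereo pan cannot express front/back, so rear angles are folded onto
    the front half (e.g. 135 deg behind-right is treated like 45 deg front-right).
    """
    if azimuth_deg > 90.0:
        azimuth_deg = 180.0 - azimuth_deg
    elif azimuth_deg < -90.0:
        azimuth_deg = -180.0 - azimuth_deg
    pan = azimuth_deg / 90.0               # -1 hard left .. +1 hard right
    theta = (pan + 1.0) * math.pi / 4.0    # 0 .. pi/2
    return math.cos(theta), math.sin(theta)

# A stone door fixed 5 m "north" of a listener standing at the origin.
door = (0.0, 5.0)
for yaw in (0.0, 45.0, 90.0):
    az = relative_azimuth((0.0, 0.0), yaw, door)
    left, right = constant_power_pan(az)
    print(f"yaw {yaw:5.1f}: door at {az:6.1f} deg, L={left:.2f} R={right:.2f}")
```

Turning the head 90 degrees to the right moves the door from straight ahead to -90 degrees, hard left, so the sound stays put in the world while the head swings. For a hand-held source like the torch, the same function would simply be called every frame with the tracked prop position as `source_xy`.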

Finally, we pre-rendered some SFX mixes in Pro Tools. This was great for explosions and chaotic scenes where lots of stuff is happening all around you. In those moments, perfectly accurate positioning mattered less than making the moment sound awesome and feel overwhelming.

What about the music composition process, what was your methodology there?

Music presented me with two big questions: first, where to use music in the experience, and second, how to implement it. Of course there were places where I wanted to heighten the emotion, such as when you fight the sea monster. For those spots, I just approached it like an action score: big orchestra, dissonances, large vertical leaps and dynamic color contrasts in the parts, rapid pace, all the stuff needed to make the mind release a nice fight-or-flight hormone cocktail.

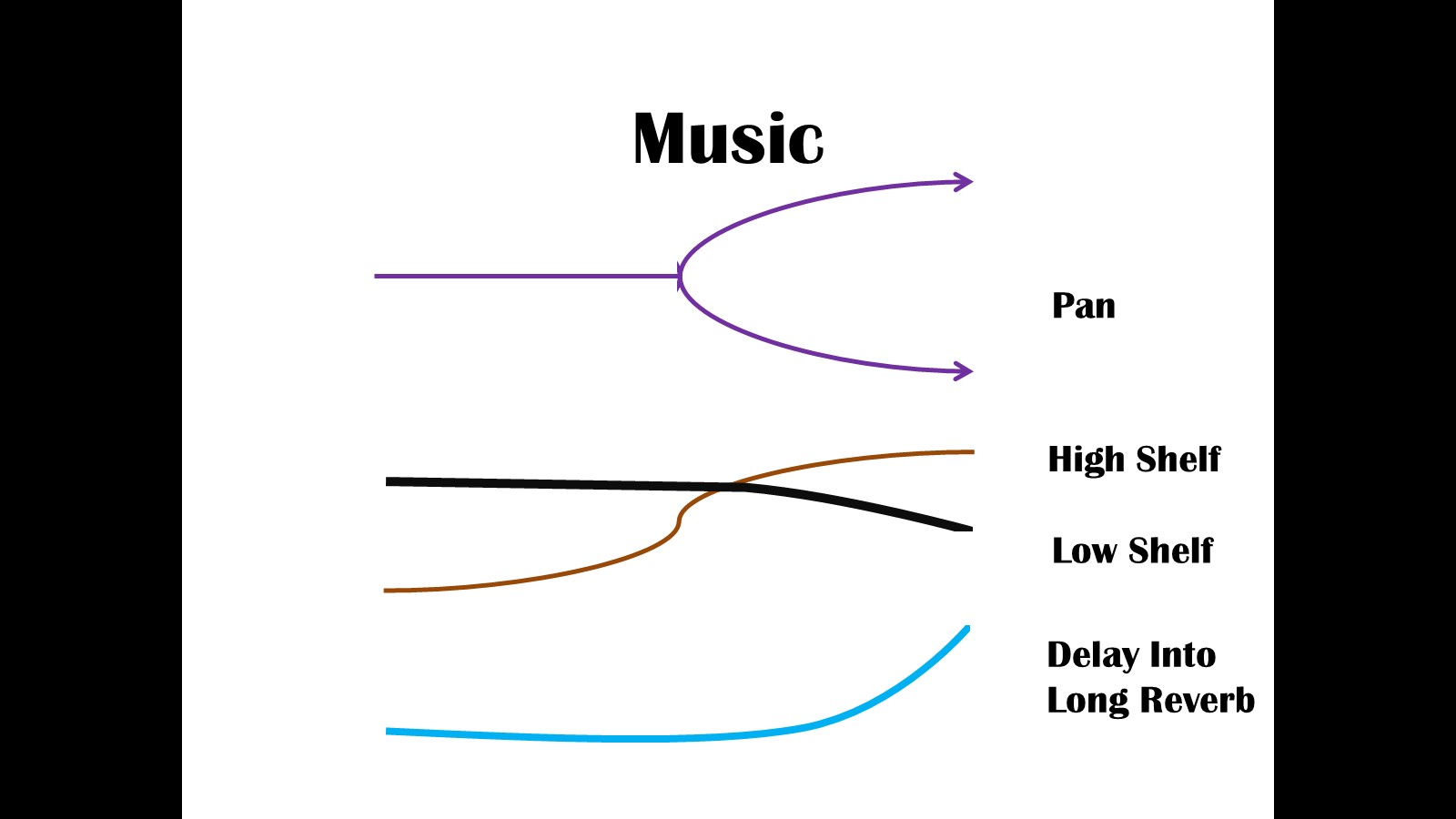

But there were other places where I wanted the music to communicate something much more subtle. Let me give you an example. Near the beginning of the experience, you stumble onto an ancient throne room. You see the throne in your VR helmet, and you can reach out and touch it. Heck, you can even sit on it if you want to. In fact, you HAVE to sit on the throne to progress through the experience. Sitting down becomes critically important later in the experience, so we needed people to make the connection that they can actually sit down on something they see in their goggles, and also help them understand subliminally that it’s a desirable thing. So I decided to add a nice musical cadence there. But I also wanted to do something very subtle. I wanted to convey the idea that what they were experiencing internally was real, connected to the actual world outside them. So I implemented the music in such a way that it starts low and localized inside of you, then grows to fill your awareness at the cadence point, then branches outside of you and into the world beyond.

The chart below is a loose representation of the implementation processes employed to achieve this. The music begins in mono, fading in, dry, with all the high frequencies rolled off. As the music comes to its cadence, it swells into stereo, the higher frequencies come to life and a small amount of reverb is added. Then, after the cadence, as the sustained chord slowly fades, we start to roll off the low frequencies and attenuate the dry signal while simultaneously sending the mix into an L-R delay, with all of its return going into a deep reverb (400 ms pre-delay and 5-second decay).
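The processing moves described above can be sketched as a set of keyframed automation curves. In this sketch, only the 400 ms pre-delay and 5-second decay of the deep reverb come from the interview; the cadence time, cutoff frequencies, gains and every keyframe time are invented placeholders, and the parameter names are hypothetical rather than taken from any particular DAW or Unity plug-in.

```python
from bisect import bisect_right

# Hypothetical automation for the throne-room cadence. Only DEEP_REVERB's
# 400 ms pre-delay and 5 s decay come from the interview; the cadence time,
# cutoffs, gains and keyframe times are invented placeholders.
CADENCE_S = 8.0
AUTOMATION = {
    # (time in seconds, value) keyframes, times strictly increasing
    "stereo_width": [(0.0, 0.0), (CADENCE_S, 1.0)],                 # mono -> full stereo
    "lowpass_hz":   [(0.0, 800.0), (CADENCE_S, 20000.0)],           # highs rolled off, then opened up
    "reverb_send":  [(0.0, 0.0), (CADENCE_S, 0.2)],                 # "a small amount of reverb"
    "highpass_hz":  [(CADENCE_S, 20.0), (CADENCE_S + 8.0, 600.0)],  # lows rolled off after the cadence
    "dry_gain":     [(0.0, 0.0), (2.0, 0.8), (CADENCE_S, 1.0), (CADENCE_S + 8.0, 0.0)],
    "delay_send":   [(CADENCE_S, 0.0), (CADENCE_S + 4.0, 1.0)],     # into the L-R delay
}
# All of the L-R delay's return feeds this deep reverb.
DEEP_REVERB = {"pre_delay_s": 0.4, "decay_s": 5.0}

def value_at(points, t):
    """Linearly interpolate keyframes, holding the first/last value outside them."""
    times = [time for time, _ in points]
    i = bisect_right(times, t)
    if i == 0:
        return points[0][1]
    if i == len(points):
        return points[-1][1]
    (t0, v0), (t1, v1) = points[i - 1], points[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def snapshot(t):
    """All automated parameters at time t, rounded for display."""
    return {name: round(value_at(points, t), 3) for name, points in AUTOMATION.items()}

for t in (0.0, 4.0, CADENCE_S, CADENCE_S + 4.0, CADENCE_S + 8.0):
    print(f"t={t:4.1f}s", snapshot(t))
```

Printing snapshots at a few points traces the arc: a mono, dark, dry opening, full-width stereo at the cadence, then the dry signal draining away into the delay and deep reverb. Cutoffs are interpolated linearly here for simplicity; a filter sweep usually sounds more even when interpolated on a logarithmic frequency scale.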

The net result is that it feels as if the music is welling up from deep within your torso, almost imperceptibly at first, then rises into your head and takes over your awareness for a moment. After that, it gently recedes and seems to float away from you, outside of your head and out into the experience itself. It was pretty cool.

What’s your approach with dialog recording and implementation?

Work with great talent, make sure the script writer (or other vision holder) is present, and get clean recordings. Organization matters too, in both your script and your session, so you can keep track of lines, takes, rewrites, etc., and not get confused when you get back to your studio for editing.

For the TED experience, I added processing to simulate the feel of radio communication circa 1940. Crackles, pops, EQ, some modulated distortion, that kind of thing.
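A crude version of that 1940s radio treatment can be sketched in a few lines: band-limit the voice, push it through a soft clipper whose drive slowly wobbles, and sprinkle in random impulses for crackles and pops. The 300 Hz to 3 kHz band, wobble rate, crackle density and all function names are assumptions for illustration, not the settings used on the TED project, and simple one-pole filters stand in for proper EQ.

```python
import math
import random

def one_pole_lowpass(x, cutoff_hz, sr):
    """Simple one-pole low-pass filter over a list of samples."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sr)
    y, out = 0.0, []
    for s in x:
        y = (1.0 - a) * s + a * y
        out.append(y)
    return out

def one_pole_highpass(x, cutoff_hz, sr):
    """High-pass built as the input minus its low-passed copy."""
    low = one_pole_lowpass(x, cutoff_hz, sr)
    return [s - l for s, l in zip(x, low)]

def old_radio(x, sr, low_cut=300.0, high_cut=3000.0, drive_depth=0.5,
              wobble_hz=3.0, crackle_rate=8.0, seed=1940):
    """Hypothetical 'vintage radio' chain: band-limit, modulated distortion, crackles."""
    rng = random.Random(seed)  # seeded so repeated renders are identical
    band = one_pole_lowpass(one_pole_highpass(x, low_cut, sr), high_cut, sr)
    out = []
    for n, s in enumerate(band):
        # Drive swings between 1 and 1 + 2 * drive_depth at wobble_hz.
        drive = 1.0 + drive_depth * (1.0 + math.sin(2.0 * math.pi * wobble_hz * n / sr))
        y = math.tanh(drive * s)  # soft clipping
        # On average, crackle_rate random impulses per second.
        if rng.random() < crackle_rate / sr:
            y += rng.uniform(-0.5, 0.5)
        out.append(max(-1.0, min(1.0, y)))  # keep within full scale
    return out

# Usage: one second of a 1 kHz test tone at 16 kHz.
sr = 16000
tone = [0.5 * math.sin(2.0 * math.pi * 1000.0 * n / sr) for n in range(sr)]
processed = old_radio(tone, sr)
print(len(processed), max(abs(v) for v in processed))
```

Seeding the random generator keeps the crackles identical from render to render, which makes it easier to edit and re-export lines without the texture shifting under the dialog.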

So what do we have to look forward to next?

Coming on July 1, 2016… to Times Square in New York City. The VOID will unveil its brand new Ghostbusters experience to the general public. Sam, you’ve got to grab a plane ticket and come over and check it out! And yes, it’s much better and lots more fun than the Ghostbusters movie trailer, I might add… ;).

Very exciting stuff, I’ll definitely be planning a trip sometime! We can’t wait to hear more!

LINKS

Chance Thomas

The VOID


The Sound Architect