Sam Hughes spoke to Garry Taylor and Simon Gumbleton about working with audio on the PSVR!

Garry Taylor (Audio Director) and Simon Gumbleton (Technical Sound Designer)

Sony Interactive Entertainment Europe, Worldwide Studios, Creative Services Group

Thanks for joining us! It’s a pleasure to have you all on the site.

GT: Thanks for asking us!

First of all, how long have you been working with and experimenting with the PlayStation VR technology?

GT: We’ve been working with VR tech for over 3 years now. Our group (Sony Interactive Entertainment Europe Worldwide Studios Creative Services Group) primarily creates content for first party titles, but we also collaborate with other teams and R&D groups within the business, and on the design of the audio systems that are part of PlayStation VR (PS VR).

For any platform, it’s vitally important that the hardware allows game developers to achieve what they want to achieve easily, so close collaboration between our audio engineering and design teams (as well as the other audio-focused teams within the company) is very important.

SG: We started working on software for the platform in the very early stages, just as prototype units started to be distributed outside of R&D.

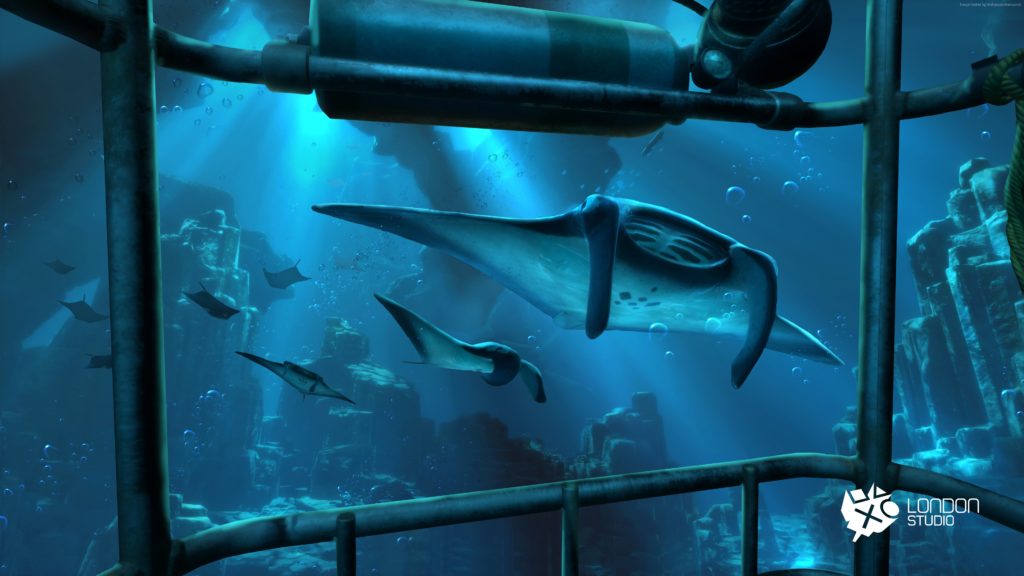

In that time we’ve built a lot of prototypes, some of which have gone on to form the foundations of launch titles (such as VR Worlds).

What have been some innovative features you’ve been able to implement in VR Worlds and other upcoming titles?

SG: One interesting feature we developed in VR Worlds was what we call the “Focus System”.

Because in VR the player is the camera, they have full control. We realised we could exploit this new input data in cool ways. Essentially the focus system mimics something called ‘the cocktail party effect’, where a listener can have this perception of tuning into something in their sound world and focusing on it.

We can use data about what the player is looking at to subtly change the mix, and bring certain elements more clearly into focus.
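As an editorial illustration only (this is not the VR Worlds implementation, which the interview does not describe in detail), a gaze-driven mix change can be sketched as a small gain boost that grows as the player looks more directly at a sound source. The function name `focus_gain_db`, the 30 degree cone and the 3 dB maximum boost are all assumptions chosen for the example.

```python
import math

def focus_gain_db(gaze, to_source, cone_deg=30.0, max_boost_db=3.0):
    """Return a gain boost in dB, largest when the gaze points straight at the source.

    gaze and to_source are non-zero 3D vectors (x, y, z); both are hypothetical
    inputs that a game engine would supply from head tracking and object position.
    """
    def normalise(v):
        length = math.sqrt(sum(c * c for c in v))
        return tuple(c / length for c in v)

    g = normalise(gaze)
    s = normalise(to_source)
    # Clamp the dot product so rounding error cannot push acos out of range.
    cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(g, s))))
    angle = math.degrees(math.acos(cos_angle))
    if angle >= cone_deg:
        return 0.0  # outside the focus cone: leave the mix untouched
    # Linear fade from the full boost at 0 degrees to no boost at the cone edge.
    return max_boost_db * (1.0 - angle / cone_deg)
```

In practice a value like this would probably be smoothed over time so the mix shifts subtly rather than snapping as the player glances around.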

Another interesting thing about VR is just how real and tactile the world can feel. For example getting super close to a character while they are shouting at you, or directly interacting with physical objects. Because these sensations are heightened so much in VR, we did a lot of work to make the audio incredibly dynamic and responsive.

Sounds for these objects have lots of layers of detail that change and react to parameter variations such as velocity, proximity, impact force and others to really sell that 1-to-1 experience. This is an example where we’ve taken traditional techniques to the next level with PS VR.

GT: The ability to use the microphone as an input device to influence the world within the game or experience has also been significant.

The microphone is on the HMD (Head Mounted Display), close to the player’s mouth, so it’s easy to analyse the microphone input in real-time and use that data to modify or influence anything in the world. This opens up a huge amount of exciting possibilities depending on the type of title we’re working on.
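To make the idea of analysing microphone input concrete, here is a minimal sketch that turns a block of microphone samples into a 0 to 1 control value based on its RMS level. It assumes floating-point samples in the range -1 to 1; the function names and the -50 dB and -10 dB thresholds are hypothetical, and the PS VR SDK's actual microphone API is not shown here.

```python
import math

def rms_dbfs(samples):
    """RMS level of a block of float samples (-1..1), in dB relative to full scale."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence
    return 10.0 * math.log10(mean_square)

def mic_to_parameter(samples, floor_db=-50.0, ceil_db=-10.0):
    """Map the block's level onto 0..1: 0 at or below floor_db, 1 at or above ceil_db."""
    level = rms_dbfs(samples)
    t = (level - floor_db) / (ceil_db - floor_db)
    return max(0.0, min(1.0, t))
```

The resulting value could then drive anything in the world, for example how strongly a candle flame reacts when the player blows on it.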

How does the rendering of the 3D audio work?

GT: A lot of the audio processing is done on the Processing Unit, which the PS VR headset plugs into.

This means the 3D Audio system doesn’t tax the PS4 in terms of resources. It also handles the Head-Related Transfer Function (HRTF) processing which applies the relevant delays and filtering to ensure that each sound source appears to come from the right direction, in relation to the player’s head position. This frees the PS4’s power to be used by sound designers and composers for their own creative ends, as opposed to having to process everything to make it work for VR.

SG: The game engine has information about each sound that gets played in the world, information like position and orientation.

This information is passed through to the 3D audio renderer along with the actual audio stream to be rendered. At this point, the 3D audio system can take the audio stream and apply processing so that it emulates the position-specific response for each ear that the listener’s head and ears would create. So essentially, we can tell each ear what this sound would be like if it was really coming from this position.
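A full HRTF involves measured, direction-dependent filters, which are beyond a short example. As a rough illustration of just the delay part, the sketch below uses the Woodworth spherical-head approximation to estimate the interaural time difference for a source in the frontal hemisphere. The head radius, speed of sound and function names are assumptions made for the example, not values from the PS VR renderer.

```python
import math

HEAD_RADIUS_M = 0.0875        # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0    # assumed speed of sound in air

def itd_seconds(azimuth_deg):
    """Woodworth estimate of interaural time difference.

    azimuth_deg: 0 is straight ahead, +90 is directly to the right.
    Values are clamped to the frontal hemisphere (-90..90).
    A positive result means the sound reaches the right ear first.
    """
    az = math.radians(max(-90.0, min(90.0, azimuth_deg)))
    theta = abs(az)
    itd = (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))
    return math.copysign(itd, az)

def ear_delays_in_samples(azimuth_deg, sample_rate=48000):
    """Return (left_delay, right_delay) in whole samples; the far ear is delayed."""
    itd = itd_seconds(azimuth_deg)
    delay = round(abs(itd) * sample_rate)
    return (delay, 0) if itd > 0 else (0, delay)
```

With these assumed constants, a source directly to one side arrives roughly 0.66 ms later at the far ear. A real renderer would combine delays like this with per-ear filtering.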

What have been some of the major lessons you’ve learnt from working with VR audio?

SG: Believability is key I think. Understanding how to construct worlds that match the expectation of the player, and how audio can both help and hinder that goal.

There is this idea in VR of the fidelity contract, which sets up the expectations of the player in a virtual space. For me, audio is one of the key things that can enhance that fidelity contract.

In experiences like those in VR Worlds, there are many opportunities for us to make the world more believable, and it’s important to recognise those situations and construct the audio appropriately.

Sometimes that means lots and lots of detail in an interaction, sometimes it’s making sure that the audio reacts to a player action or input (for example sticking your head out the window of a speeding van, and hearing the wind rush into each ear as you turn your head around).

GT: In terms of believability, dynamic range is a lot more critical in VR than when playing screen-based titles. Dialogue especially. If you want people to believe they’re in a specific environment, having lots of compression on dialogue, for example, becomes very jarring because that isn’t how dialogue sounds in the real world. When recording dialogue with a close mic you get a very intimate recording: it sounds close. However, if you get that intimate sound from a character that’s far away it sounds very unnatural and your brain instantly flags it up as incorrect.

Recording perspective is very important. We’ve had to change the way we record certain content. Getting it wrong would break the sense of presence immediately.

As Simon said, VR requires a greater amount of detail to be considered. It allows people to interact with the world in a different way, including getting really close to objects, and when they do, they expect to hear all the detail they can’t hear from a distance. This involves using distance attenuation for certain elements that fall off over very small distances, sometimes as little as 10 cm.
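A very short-range attenuation curve of this kind can be sketched as follows. This is an illustrative assumption rather than the curve used in any shipped title: the close-detail layer plays at full level within 2 cm, fades linearly, and is silent beyond 10 cm.

```python
def close_detail_gain(distance_m, full_gain_within_m=0.02, silent_beyond_m=0.10):
    """Linear gain (0..1) for a detail layer that is only audible very near an object.

    The 2 cm and 10 cm thresholds are hypothetical defaults for illustration.
    """
    if distance_m <= full_gain_within_m:
        return 1.0
    if distance_m >= silent_beyond_m:
        return 0.0
    # Linear fade between the two thresholds.
    return (silent_beyond_m - distance_m) / (silent_beyond_m - full_gain_within_m)
```

The broader layers of the same object would keep their usual, much longer falloff, so the extra detail only emerges when the player leans right in.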

What are some of the key misconceptions you think people have of VR audio?

SG: One misconception is perhaps the idea that there is just one set of “rules” for audio in VR.

Different experiences require different approaches, just like in non-VR games. For me, what’s most important is having an understanding of all the rules, techniques and approaches and knowing why you should choose A over B, and subsequently what you will gain or lose in each case.

GT: I’m not sure if it’s a misconception, but one key thing for me is the importance of audio’s role in reinforcing the sense of presence developers are striving for in VR. Without convincing audio design there is no sense of presence so its importance should never be underestimated.

Presence relies on the believability of the audio experience. Not necessarily accuracy but believability. VR effectively gives content creators a mainline into the player’s psyche and can affect a player at a primal level that’s just not possible watching a screen. Audio design will either support that sense of presence or destroy it. It’s that critical.

If you could give anyone any advice before they work with VR Audio what would it be?

SG: Take nothing for granted!

Play lots of VR and non-VR games and try to understand why some things work well and some things don’t.

Don’t be afraid to experiment and get lots of people to try it. The feeling you actually have in the headset can sometimes be very different to hearing the same thing while looking at a flat screen.

And lastly but perhaps most importantly…invest in your tools!

What do you believe the potential of VR audio is?

GT: It’s early days. It’s a very new technology and designers and engineers (us included) are still finding our feet and discovering what works and what doesn’t, from a creative point of view.

It’s an exciting time!

Incremental increases in processing power available to audio teams generally mean there’s lots of scope for gains to be made in terms of acoustic environment modelling and DSP techniques. As the number of different types of data entering any system increases (in the case of VR: sound through the microphone, head orientation and position data, and spatial controller data), people will find different ways to combine and process that data, which will lead to further innovation.

As for development itself, finding workflows within VR that save designers from jumping between designing on a screen and going into VR to check their work will also help developers create, implement and check content faster.

We also had a couple of questions from Twitter, so here are some bonus Q’s!

Is Loudness going to be more/less/same importance w/ VR as opposed to prev generations given headphones will be the norm? Currently loudness is more of a recommendation on PS4 than a mandate, making some games quite a bit louder than enjoyable – @JayMFernandes

GT: Hi Jay. I believe that loudness is even more important in VR than screen-based systems, as the louder your content is the less dynamic range you have to play with, and presence is, to a certain degree, reliant on dynamics.

It’s up to tools and middleware developers, as well as HMD manufacturers, to ensure they promote good audio engineering practice by giving developers the tools they need to manage and measure loudness and dynamic range effectively.
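As a simple illustration of the link between loudness and dynamics, the sketch below computes the crest factor (peak level minus RMS level) of a block of samples. It is only a rough indicator: proper programme loudness measurement uses the K-weighting and gating defined in ITU-R BS.1770, which this example does not implement. The function names are hypothetical, and the input is assumed to be a non-empty list of floats in the range -1 to 1.

```python
import math

def _to_db(x):
    """Convert a linear amplitude to dB, treating zero as negative infinity."""
    return 20.0 * math.log10(x) if x > 0.0 else float("-inf")

def peak_and_rms_dbfs(samples):
    """Return (peak, rms) of a non-empty block of float samples, both in dBFS."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return _to_db(peak), _to_db(rms)

def crest_factor_db(samples):
    """Peak-to-RMS ratio in dB; lower values suggest heavier compression or limiting."""
    peak_db, rms_db = peak_and_rms_dbfs(samples)
    if rms_db == float("-inf"):
        return 0.0  # silence: no meaningful crest factor
    return peak_db - rms_db
```

A pure sine wave has a crest factor of about 3 dB, while a square wave at full scale has 0 dB. Content pushed louder with limiting tends towards the lower end, which is the loss of dynamic range described above.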

Have you dealt with encouraging/assisting player navigation with #VRAudio ? If so, what interesting things have you learned? – @rbsounddesign

SG: Hello Rob. With much higher positional resolution in 3D audio systems, we can use audio cues very effectively to guide the player’s focus. For example in The London Heist, there is an exit door with some sparking lights above it. This audio cue guides the player’s focus to the door, reinforcing the narrative that they are trapped.

We can also do more subtle things with the mix to help orientate players. For example, in our recent titles, the music is positional but the mix is weighted heavily towards the front. This helps the player understand where “forward” is, even when there are no visuals, ensuring that when the next scene starts they are orientated correctly.

Thanks very much Garry Taylor and Simon Gumbleton for talking to us. Lots of interesting topics around VR Audio!
