Article by Matthew Miller

Edited by Alyx Jones

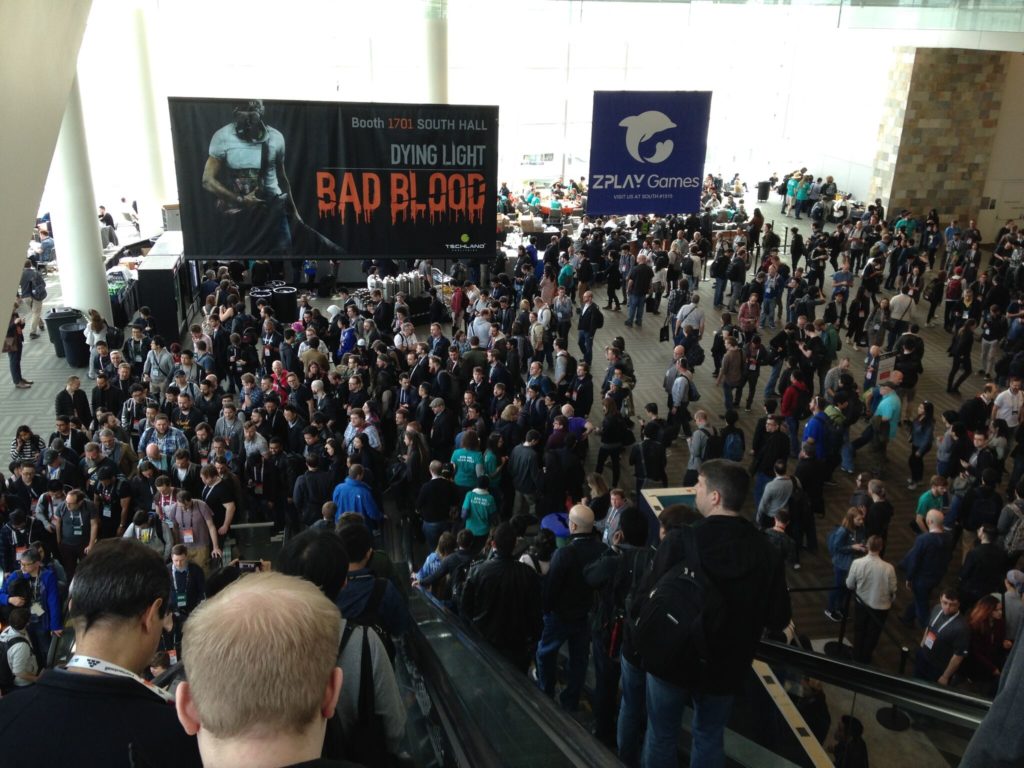

GDC 2018 has now come and gone. This year the conference drew a record 28,000 attendees to the Moscone Center in San Francisco, across many disciplines, from programming to game design, but most importantly for us: audio! GDC 2019 has already been announced, so it’s time to start saving! This was my fourth annual pilgrimage to San Francisco, but my first GDC with an All Access Pass, which allowed me to explore a number of disciplines within the industry. My first GDC was back in 2015, and although I’d been working in audio for many years, I was brand new to the games industry and eager to learn all that I could about game audio. Now that I’m working in the industry, I felt compelled to use this trip to thank the many folks who have been so welcoming and supportive of me since the beginning, and to bring that same spirit of generosity to those making their way to the conference for the first time.

I kept a day-by-day blog of my time at GDC 2018, and I hope you can take away some gems from my roundup, even if you couldn’t make it in person… or maybe you just want to relive the amazing time you had!

[showhide type=”post1″ more_text=”Click here to show Mondays Highlights” less_text=”Hide Monday Highlights”]

Day 1 – Monday, March 19

7:00 to 9:00 AM

#coffeeofgameaudio

Every weekday morning of GDC, from 7 to 9 AM, means one thing for game audio people: the ritual meetup at Sightglass Coffee. The coffee hang was started six years ago by Damian Kastbauer and Anton Woldhek as a way to encourage veterans and newcomers alike to get to know each other and share their GDC experiences. At around 7:30, attention turns to a round-table chat where everyone who has gathered openly discusses the highlights of the previous day and what they’re most excited about for the day ahead. I can think of no better way to immerse oneself in game audio culture.

Oh, and the coffee is AMAZING! For me it’s one of the featured pour-over coffees and a slice of vegan chocolate cake, please.

10:00 AM to 11:00 AM – VRDC

Talk 1 – ‘Carne y Arena’ (Flesh and Sand): Uncompromising Audio for Narrative VR

‘Carne y Arena’ (Flesh and Sand): Uncompromising Audio for Narrative VR was my first talk of the day, and one of my most anticipated sessions of the entire conference. The panel of speakers included audio designers Bill Rudolph and Kevin Bolen, along with sound editor and re-recording mixer, Leff Lefferts, all from Skywalker Sound, and Technical Sound Designer, Damian Kastbauer.

‘Carne y Arena’ is a 6½-minute virtual reality installation that “explores the human condition of immigrants and refugees.” The installation is presented in a 60-by-60-foot space, into which sand has been trucked to further immerse users in the experience.

The talk centred on the challenge of creating a dynamic headphone mix that would rival a theatrical film mix. Director Alejandro Iñárritu (Birdman, The Revenant) would accept no compromise to his artistic vision.

After numerous iterations of the sound mix, the audio team began to realize that trying to “simulate the real” kept missing the mark. The team needed to bridge the gap between a guest’s emergent VR experience and the scripted narrative essential to tell the story. In other words, the challenge was to balance the cinematic language of film with the realistic simulations of a video game.

A turning point came when they did a mix on a traditional soundstage, which brought the narrative elements into focus. But that raised the question: how do you get that mix into what the team called the “Infernal Machine”, that is, headphones and a VR headset?

The answer came when the team realized that they should treat elements differently, based on their purpose in the experience, as opposed to simulating the real. This meant that some elements, such as essential dialogue, could be presented in 2D, while other elements, such as the ominous flyby of a helicopter, could be spatialized binaurally, an experience made even more visceral by the use of subwoofers in the presentation space.

In the end, making the “intent of the experience” more important than the playback technology won the day.

11:20 AM to 12:00 PM – Game Narrative Summit

Talk 2 – Making Them Care: The Narrative Burden for Creating Empathy

Making Them Care: The Narrative Burden of Creating Empathy was the first GDC talk I attended outside of audio. It was packed with like-minded people wanting to incorporate a healthier set of values into their games. The presenter, Heidi McDonald, is the Senior Creative Director of iThrive Games, an organization that seeks to promote the development of social and emotional learning in games. This learning is focused on working with teens but is valuable to people of all ages.

iThrive Games uses a wide range of social tools, from think tanks, workshops and game jams, to working with universities in order to pull together collective experience and research science that will put empathy at the center of our game design.

Heidi used her own life experience to open up about games that affected her, along with a number of other examples where games have done empathy right.

The most basic advice for developers is to use visual, audio and narrative cues that support the emotions of players and NPCs, such as blue lighting to convey sadness, or NPCs with bowed heads.

You can download the Empathy Toolkit and other development tools from the iThrive Games website.

Talk 3 – 3:00 PM to 3:30 PM

‘A Mortician’s Tale’: A Different View on How Games Treat Death

Gabby DaRienzo, co-founder of Laundry Bear Games, gave a mostly serious, but often humorous, talk about death in video games, how games helped her cope with her own death anxiety, and how her own game, ‘A Mortician’s Tale’, treats death in an empathetic and positive way.

‘A Mortician’s Tale’ is a narrative game in which you take on the duties of an actual mortician. These include preparing bodies for burial and cremation and attending the funerals of the deceased, along with the business side of the job: reading the mortician’s emails, which include interactions with her boss, co-workers and clients.

The game sought to strike a balance between accuracy and comfort. Characters were designed with larger heads and big eyes to avoid the uncanny valley, and a muted purple palette created an environment of calm. When interacting with the bereaved in the funeral home, you don’t tell people how to grieve; instead, you listen.

Gabby says, “When you kill a zombie in a game, the body disappears and you no longer think about it. In ‘A Mortician’s Tale’, you spend a lot of time with dead bodies, as you prepare them for cremation or burial. You are asked to take care of a body of a person that you don’t know (or love), but show them the respect and dignity anyone deserves.”

Gabby in no way frowns on more traditional death mechanics, but she does ask us to consider what is truly right for our games. After all, making better games and telling better stories is why we’re here.

Monday Night

On Monday night I found myself at the annual VGM Wine Bar event at Terroir, a neighborhood establishment not too far from the conference. A terrific bluegrass band, Fog Holler, was playing, and the place was packed. I got myself a glass of dry red wine, and while snaking my way through the crowd I recognized sound artist and musician Nathan Moody. I’m a fan of Nathan’s work and had recently watched an extensive interview with him on DivKid Video’s YouTube channel. I introduced myself, and we immediately fell into a lengthy conversation about modular synthesizers, sound installations and home-made instruments. What a treat.

[/showhide]

[showhide type=”post2″ more_text=”Click here to show Tuesdays Highlights” less_text=”Hide Tuesday Highlights”]

Day 2 – Tuesday, March 20

Audio Bootcamp XVII

Organizers Scott Selfon and Damian Kastbauer ushered in this year’s Audio Bootcamp with a rundown of the day’s events and a few simple guidelines on how we should respect one another, and they encouraged folks to check out talks from other disciplines within the game industry.

10:00 AM to 11:00 AM

Talk 1 – A Composer’s Guide To The Galaxy

Elvira Bjorkman (Composer, Two Feathers Studios) opened this year’s Audio Bootcamp with her philosophy on composing music for games, announcing: “Let’s Talk About Glasses”. By glasses she meant imaginary ‘lenses’ that let us look at our composition process with greater objectivity. Each stage of production requires us to put on a different pair of ‘Pretend Glasses’. For example, wear the ‘lens of the player’ during pre-production, and play the game before you even start thinking about the music. The ‘lens of delight’ is about emotion: both happy and sad are delightful, because they make us feel something. Delight is the opposite of boring.

A few takeaways stuck with me and with others I talked to:

- Try to write the shortest loop possible, without it being boring. “A pattern mastered by the player is a boring pattern.”

- Find the balance between being compelling while also staying in the background

- Elvira played a short video of a level intro in the game ‘Aragami’ while posing the question, “When should music start in the game?” In this particular case, the music didn’t start until exploration began.

- Loops are a good way of keeping the player immersed in gameplay, and you can use transitions as an effective way to add surprise

Talk 2 – 11:20 AM – 12:20 PM

Part 1 – Programming Composers and Composing Programmers

Victoria Dorn (Sony Interactive Entertainment) gave a compelling, high-level talk on how and why audio folks should get some programming experience under their belts. For one thing, in today’s competitive environment, the more abilities you can bring to the table, the better. It’s no longer enough to be just the composer or sound designer; you also need a deep understanding of middleware and, at minimum, a vocabulary you share with programmers, so that they can implement your work effectively in the game.

Three basic steps will set you on your way:

- Learn the fundamentals of programming

- Learn pseudocode, a way to use common words to describe a solution to a programming problem

- Learn a scripting language, like C#, Python or Lua


Part 2 – Adding Punch To Your Sound

Gina Zdanowicz (Serial Lab Studios) showed us how to (you guessed it) add more punch to our music and sound design, and ensure that our most important design elements cut through the mix. For sound effects, Gina suggests using sounds with a fast attack, and using a transient designer plugin to shape the contour of your sounds so that they cut through. She demonstrated the virtues of this lesser-known processing tool by shaping the attack and decay of a number of weapon sounds. Adding punch to your music starts with arrangement decisions, such as octave doubling for certain instruments and choosing instrumentation that cuts through a mix.

12:20pm – 1:50pm

Lunchtime Surgeries

After an obligatory “no, not that kind of surgery” quip, you are invited to grab some lunch and meet in an adjacent room to chat with that morning’s presenters. The presenters move from table to table over the course of the lunch, allowing you to spend quality time with each one, and ask questions you didn’t get to ask during the talks.

Talk 4 – 2:10pm – 2:40pm

Reel Talk

Kevin Regamey (Power Up Audio) and Matthew Marteinsson (Klei Entertainment) brought their popular Twitch stream, Reel Talk, to Audio Bootcamp, where they offered up-and-coming game audio professionals best practices for putting together a reel and web presence that will grab the attention of prospective employers. These two don’t pull any punches, and I would say that their honest, constructive critiques have raised the bar for everyone in the industry.

Talk 5 – 3:00pm – 3:30pm

Talking About Talking: Recording and Producing Well Crafted Dialogue

Amanda Rose Smith is an independent dialogue recordist and editor who works across multiple disciplines. In this talk she explained why certain mics are better suited to different types of performance. For example, she suggests using a shotgun mic for louder performances, because its tight polar pattern allows the performer to stand further from the microphone without picking up additional room ambience. By contrast, large-diaphragm mics are better suited to more nuanced, intimate dialogue.

She stressed the value of coming up with a file-naming convention before recording begins, and finding a way to streamline the labelling process.

A new concept for me was using a destructive, single-track editor, because it’s much quicker than editing in the DAW. You should always work on a copy of the original files, but editing destructively lets you open one file at a time, make your edits, save and close. By contrast, editing, consolidating and naming files in the DAW introduces a number of opportunities for failure: if a file is accidentally mislabelled, it won’t get triggered in the game.

Amanda is not a fan of automating the mastering process so that every file targets the same RMS level. Each voice has its own character when it comes to energy and frequency range, and any silence included in the RMS calculation skews the average level being targeted. Loudness standards measured in LUFS are meant for full mixes, not for single sound events like dialogue lines, which have lots of negative space in them.
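
To see why silence skews the numbers, here is a minimal Python sketch (an illustration only, not Amanda’s workflow or any metering standard) that compares the RMS of a hypothetical dialogue file with and without its silent padding. The synthetic tone standing in for speech, the padding lengths and the `threshold` used to skip near-silent samples are all assumptions; real loudness meters following ITU-R BS.1770 use a more sophisticated block-based gating scheme.

```python
import math


def rms(samples):
    """Root-mean-square level of float samples in the range -1.0..1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def active_rms(samples, threshold=0.01):
    """RMS of only the samples louder than a crude, illustrative threshold.

    This per-sample gate is an assumption for demonstration; it is not
    the gating used by LUFS meters.
    """
    return rms([s for s in samples if abs(s) > threshold])


def to_dbfs(level):
    """Convert a linear level to dBFS (full scale = 1.0)."""
    return 20.0 * math.log10(level) if level > 0 else float("-inf")


# Hypothetical dialogue file: 0.5 s of a 220 Hz tone standing in for speech,
# padded with 0.5 s of silence before and 1.0 s after (8 kHz sample rate).
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 220 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE // 2)]
line = [0.0] * (SAMPLE_RATE // 2) + tone + [0.0] * SAMPLE_RATE

print(f"RMS of the voiced part only: {to_dbfs(rms(tone)):.1f} dBFS")
print(f"RMS of the whole file:       {to_dbfs(rms(line)):.1f} dBFS")
print(f"RMS with silence skipped:    {to_dbfs(active_rms(line)):.1f} dBFS")
```

Because the voiced part makes up only a quarter of the samples, the whole-file RMS reads about 6 dB lower than the voiced part alone (20 × log10(0.5) ≈ −6.02 dB). Normalizing every file to one RMS target would therefore push the voiced part of lines with more trailing silence louder.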

Talk 6 – 5:30pm – 6:00pm

Zen and the Art of Game Audio Maintenance

And with the strike of a Tibetan bowl, Anton Woldhek, principal sound designer at Guerrilla Games, began to explain how the influential book “Zen and the Art of Motorcycle Maintenance” tackles our relationship with technology. In game audio, we love technology because it allows us to realize our creative vision, and we cherish or covet our favourite software and hardware as if they were religious artifacts. But we also hate our technology when it doesn’t work properly or fails to deliver on our expectations.

We are all trying to find a balance between the three ambitions in the “Game Dev Triangle”: staying on top of cutting-edge technology, realizing our own creative aspirations, and satisfying the need to sustain ourselves and our families financially. Where you find yourself within that triangle may differ from where your fellow collaborators find themselves, so company culture becomes an important factor in whether or not you can position yourself where you want to be in the triangle.

Using the analogy of an ocean wave to represent the game development process, from pre-production (pre-wave) to production (the crest of the wave) to finishing the game, Anton laid out how we can create processes that support us through the development cycle. These range from calibrating our audio systems, to implementing debug tools, to having colleagues we can rely on at each stage of development. Such tools and best practices allow us to tackle head-on the inevitable chaos and uncertainty we face while making games.

Tuesday Night

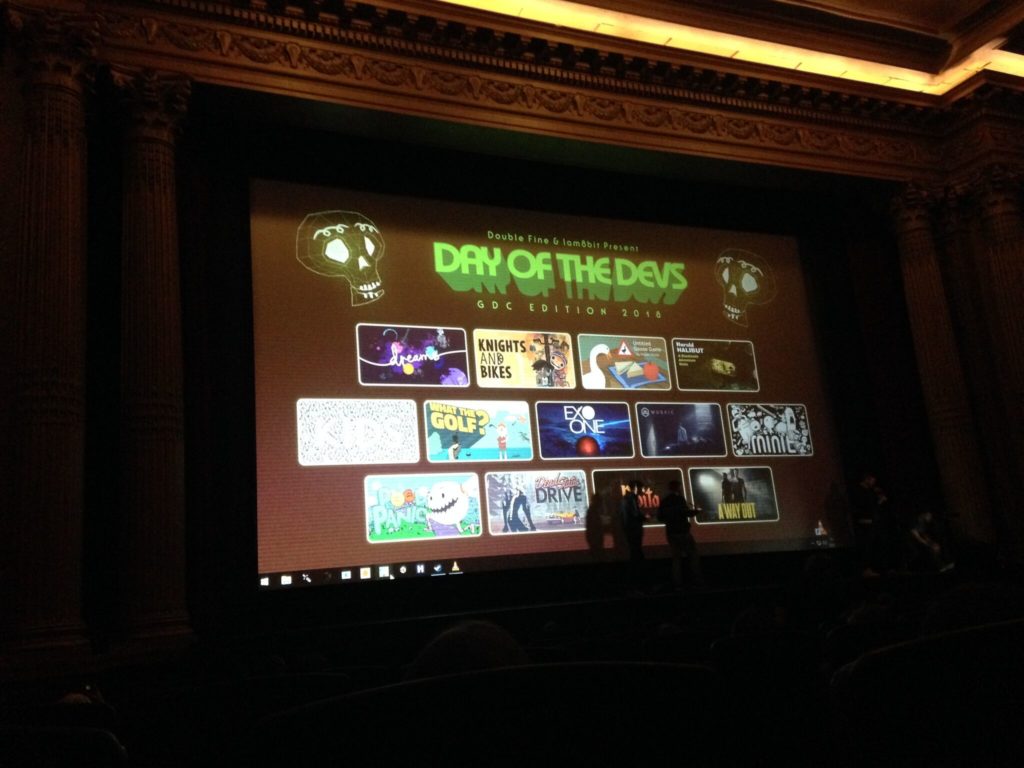

Tuesday night was quite a trip. I was invited, along with a number of my Toronto colleagues, to the annual Day of the Devs showcase, held at the Alamo Drafthouse in the Mission District. The showcase features independent games that are shown at the Day of the Devs Lounge at GDC. The Alamo Drafthouse is a funky old movie theater that has been transformed into a sprawling entertainment complex. The showcase took place inside the theater, where wandering waiters take your food and drink orders. I enjoyed a refreshing IPA and some nachos while the indie devs demoed their games on the massive screen.

[/showhide]

[showhide type=”post3″ more_text=”Click here to show Wednesdays Highlights” less_text=”Hide Wednesday Highlights”]

Day 3 – Wednesday, March 21

Wednesday, after morning coffee, I had some business to attend to, so I missed a couple of great talks, including Wilbert Roget II’s “A Modern Take on Historical Fiction: Music for ‘Call of Duty: WWII’”, Robert Rice’s “The Demonic Sound of ‘Paranormal Activity: The Lost Soul’”, as well as a micro talk on “What’s Next?” in game audio.

Talk 1 – 12:00pm – 12:30pm

A Matter of Music Design – Driving game-play with music

Eric Hamel, composer and sound designer for Boston-based game developer, Worthing & Moncrief, took us through the process of what he referred to as “Music Design”, using game data and adaptive music to support the core game mechanics and provide game-relevant information to the player.

Using the game ‘A Matter Of Murder’ as an example, Eric explained how the game sent data to the vertically layered music system in Wwise to hint to the player whether or not they were onto a clue.
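
Vertical layering like this boils down to mapping a game value onto the volumes of stacked music layers. The Python sketch below is hypothetical: the `clue_proximity` parameter, the layer thresholds and the linear fade are assumptions, and in Wwise the mapping would normally be authored as an RTPC curve on each layer rather than written in code.

```python
def layer_gains(clue_proximity, thresholds=(0.4, 0.75), fade_width=0.25):
    """Return linear gains (0.0-1.0) for a base layer plus extra layers.

    clue_proximity: hypothetical 0.0-1.0 value sent by the game, where 1.0
    means the player is right on top of a clue.
    thresholds: the proximity at which each extra layer starts fading in.
    fade_width: how much proximity it takes for a layer to reach full volume.
    """
    p = min(max(clue_proximity, 0.0), 1.0)  # clamp the incoming game data
    gains = [1.0]  # the base layer always plays
    for start in thresholds:
        gains.append(min(max((p - start) / fade_width, 0.0), 1.0))
    return gains


for proximity in (0.0, 0.5, 0.9, 1.0):
    print(proximity, [round(g, 2) for g in layer_gains(proximity)])
```

Each extra layer fades in over its own window, so the music grows denser as the player closes in, without any abrupt switch.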

In ‘Austen Translation’, you are tasked with finding the best suitor, while also trying to improve your own wit and charm. In this case, horizontal resequencing, along with MIDI in Wwise, was used to switch melodies from major to minor based on player progress in the game.
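
Horizontal resequencing, by contrast, decides which pre-composed segment plays next. As a rough Python sketch (the segment names, the `progress` value and the 0.5 threshold are all hypothetical, and this is not Wwise’s API), the switch between major and minor material can be made only at segment boundaries, so the music never cuts mid-phrase. Which mode maps to good or poor progress is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    mode: str  # "major" or "minor"


# Hypothetical pre-composed segments; A and B sections exist in both modes.
MAJOR = (Segment("theme_a_major", "major"), Segment("theme_b_major", "major"))
MINOR = (Segment("theme_a_minor", "minor"), Segment("theme_b_minor", "minor"))


def next_segment(boundary_index, progress, threshold=0.5):
    """Choose the segment to queue at the next segment boundary.

    progress: hypothetical 0.0-1.0 measure of how well the player is doing.
    Assumption: good progress selects major material, poor progress minor.
    The A/B alternation continues regardless of mode.
    """
    pool = MAJOR if progress >= threshold else MINOR
    return pool[boundary_index % len(pool)]


# Progress sampled once per boundary, e.g. after each conversation.
progress_per_boundary = [0.7, 0.6, 0.3, 0.2, 0.8]
playlist = [next_segment(i, p).name for i, p in enumerate(progress_per_boundary)]
print(playlist)
```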

Talk 2 – 3:30pm – 4:30pm

Dynamic Dialogue: The Game Designer’s Guide To Voice Recording

Senior Casting and Voice Director, Andrea Toyias, was joined by her fellow Blizzard colleagues, Senior Narrative Designer Steve Danuser and Lead Writer Justin Dye, to take an in-depth look at the best practices to prep for and execute a successful dialogue recording session.

The Holy Trinity of Voice Recording

The talk started by defining the roles of the players essential to the dialogue recording process. These key players are the Designer, the Director and the Actor.

The Designer is the ‘Keeper of the World’. They know where and how dialogue will be used in the game, and are responsible for ensuring that the dialogue remains in context.

The Director is the ‘Keeper of the Performance’. Their goal is to give voice direction to the actor, provide constructive but critical feedback, and help the actor deliver the best performance possible.

The Actor is the ‘Keeper of Character’. It is their job to bring the character(s) to life, with spirit and emotion.

It was stressed early on in the talk that all the parts of the holy trinity are essential for every team, regardless of size.

Here are a few takeaways:

- When prepping for a session:

  - Know the function the character serves in the game

  - The amount of expression should relate to how often a line will appear. If heard too often, an expressive line will feel repetitive.

  - Know your hard and soft emotional beats

    - Soft beats allow you to build relationships with characters

  - All NPCs are characters

- What to bring to the session:

  - Bring as many crayons as possible, for more colors and richer pictures

  - The actor’s initial audition (if available), as a reminder to the actor of what they brought to the read

  - Vocal samples of what the race or character sounds like

  - Any and all artwork (of the character, race, location, etc.)

  - Gameplay footage, even for non-actor performers

- In session:

  - Communicating the story:

    - Start with a conversation

    - Show your love and passion for the game and the character

    - The actor is there to build the story with you

    - Keep the information simple

  - Listen to the actor’s thoughts on interpreting the character, and allow them the comfort and freedom to bring their own life experience to the performance

  - Feedback should go to the director; let them communicate with the actor

  - Keep an open mind and listen!

Wednesday Night

The big Wednesday night event for game audio was the Interactive Audio Special Interest Group (IASIG) party at The Thirsty Bear. This is one of the most well-attended game audio events at GDC. But this year I spontaneously decided to join a small group of game audio folks for a cheap and cheerful Thai dinner and some quiet conversation.

[/showhide]

[showhide type=”post4″ more_text=”Click here to show Thursdays Highlights” less_text=”Hide Thursday Highlights”]

Day 4 – Thursday, March 22

Talk 1 – 10:00am – 11:00am

Giving a Voice to the Machines of ‘Horizon Zero Dawn’

Sound Designer Pinar Temiz takes us through her process for discovering and designing the robot sounds for Guerrilla Games’ ‘Horizon Zero Dawn’.

‘Horizon Zero Dawn’ was a brand new IP for Guerrilla, requiring the entire development team to leave the comfort zone of the Killzone series they had worked on for the previous decade. The open world environment features a lush, naturalistic landscape, populated with diverse tribes and “majestic machines” that you need to fight against. And all of these things needed sound.

With that, Pinar asks the question, “How do you start designing a game for a world that doesn’t exist yet, let alone its characters?”

The sound team started with the style guides, which laid out a complex “robot ecology” – referencing acoustic ecology – that divided machines into various categories based on types of materials, animalistic versus industrial machine traits, and a number of additional identifiers.

The research phase involved looking at the materials of each machine, from the metals and servos to the more invisible ingredients that make up each robot’s character. For example, a more advanced machine might be more efficient and quieter than an old machine.

When the team entered the production phase, they were given a “low-level design document” that detailed the function and capabilities of each creature, each of which had complex AI behaviours, 250+ animations and extensive VFX. This was a monumental task that went way beyond the scope of the Killzone series. The solution that made it all manageable was to use fewer assets per robot, but make their sounds and logic more complex.

CarouselCon

For years during GDC, game audio folks have gravitated to the carousel next to the Moscone Center to chill, eat some lunch, and spend some quality time with one another. Last year, Matt Marteinsson decided to organize a series of lunchtime micro-talks, allowing individuals to share nuggets of wisdom with their peers, and it was a big success. Thus, CarouselCon was born. This year’s talks drew an enthusiastic audience, so I would say CarouselCon is now a staple of the #gameaudiogdc tradition.

Talk 2 – 2:00pm – 2:30pm

Audio Asset Management Tips and Tricks

Richard Ludlow, audio director at Hexany Audio, provided the audience with invaluable tips on how to track your assets and manage your projects, allowing you to streamline your workflow and provide a higher level of service to your clients.

Richard recommends building asset names from general to specific, and using shorthand prefixes and categories consistently, so that you have fixed terms that let you refine your searches.
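
A small Python sketch of what general-to-specific naming buys you. The `wpn`/`amb` prefixes, the underscore separator and the two-digit variation suffix are assumptions for illustration, not Richard’s actual scheme:

```python
def asset_name(*parts, variation=None):
    """Join name parts from general to specific (category, subcategory, detail)."""
    name = "_".join(part.lower() for part in parts)
    if variation is not None:
        name += f"_{variation:02d}"  # two-digit variation suffix, e.g. _01
    return name


def search(names, prefix):
    """Return the names that start with the given prefix."""
    return [n for n in names if n.startswith(prefix)]


assets = [
    asset_name("wpn", "rifle", "fire", variation=1),
    asset_name("wpn", "rifle", "reload", variation=1),
    asset_name("wpn", "pistol", "fire", variation=1),
    asset_name("amb", "forest", "day"),
]

# Because names run general-to-specific, each longer prefix narrows the set.
print(search(assets, "wpn_"))
print(search(assets, "wpn_rifle_"))
```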

It’s easy to track your audio assets in a spreadsheet, like Google Sheets or Excel, but also consider database software, as well as proprietary audio asset management tools.

Besides tracking your sound effects, music and dialogue, you should also keep track of issues and of the various middleware components. For example, if you’re using Wwise, you should have tabs for sound banks, states, switches, real-time parameter controls (RTPCs), etc.

A number of easy-to-use task management tools, like Trello, Jira and Breeze, let you schedule project assignments for yourself and your team.

Talk 3 – 3:00pm – 3:30pm

Music In Virtual Reality

If you’ve been following Winifred Phillips’ articles on Gamasutra over the last couple of years, you’ll have noticed that she is one of the industry authorities on music for virtual reality. Using examples from a number of VR games that she’s composed for, Winifred explores when to consider 3D versus 2D music, as well as morphing diegetic music with 2D for dramatic effect. The topic of how uplifting music can help combat VIMS (Visually Induced Motion Sickness) is also addressed, using the example of the futuristic VR shooter, ‘Scraper’, where heroic music was used to convey both positive heroism and intense action.

Talk 4 – 5:30pm – 6:30pm

‘Mass Effect: Andromeda’ Audio Retrospective

BioWare Creative Audio Director Michael Kent details how his 14-member audio design team executed the audio for ‘Mass Effect: Andromeda’, which contains a staggering number of sound effects and dialogue assets, and almost 550 minutes of music. The team’s goal was to respect the ‘Mass Effect’ legacy while delivering a full-sounding, sharpened mix.

A big part of this updated sound was the new weapon recordings, done in collaboration with Warner Brothers and EA DICE. Foley and field recordings were also updated, and new sonic palettes using granular, wavetable and modular synthesis were developed. The result was a source library totalling 670GB.

The talk then moved on to how these sounds were implemented, from an overview of EA DICE’s Frostbite engine systems to procedural Foley and sophisticated, environment-based early and late reflection systems.

Thursday Night

Thursday evening was the G.A.N.G. Awards, an annual event where the Game Audio Network Guild awards excellence in the game audio industry.

For me though, Thursday night was a chance to celebrate the Ontario, Canada game development industry, at the annual OMDC (Ontario Media Development Corporation) party. This was a chance to mix and mingle with my local peers and thank the OMDC for the work that they do, helping Ontario-based independent game developers fund their projects.

[/showhide]

[showhide type=”post5″ more_text=”Click here to show Fridays Highlights” less_text=”Hide Friday Highlights”]

Day 5 – Friday, March 23

Talk 1 – 10:00am – 11:00am

The Heroes of ‘Star Wars Battlefront II’

EA DICE Sound Designer Philip Eriksson explains how the team created a cohesive mix that incorporated heroes from many eras of the franchise. The talk breaks down the sound design direction, challenges and systems used to deliver the sounds in the game. A number of video and sound examples demonstrated how the team took the sound direction to a place that was more real and in-world sounding, as opposed to “gamey”. They had original sounds from the movie stems at their disposal, but also created a lot of new content, resulting in a hybrid approach to the sound design.

A few takeaways:

- Compiling a list of words to describe the different heroes really aided the character design

- To implement certain ambiences taken directly from the movie stems, the loops were often rendered twice, using different EQ filters. This allowed the ambiences to loop independently without audible repetition.

- Vocalizations were often used for the first sound effects pass

- Some techniques from the early movies were reintroduced, like no-input mixer feedback and modular synthesis

- Instead of using dedicated LFE samples, they used filters to separate LFE content and send it to its own bus.
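
The idea of deriving an LFE send with a filter, rather than authoring separate LFE samples, can be sketched in a few lines of Python. This is an illustration under assumptions, not DICE’s implementation: the 120 Hz cutoff is a common LFE band limit chosen for the example, and a gentle one-pole low-pass stands in for the steeper filters a production mixer would likely use.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """Gentle one-pole (6 dB/octave) low-pass filter."""
    coeff = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, state = [], 0.0
    for x in samples:
        state += coeff * (x - state)
        out.append(state)
    return out


def route_with_lfe_send(samples, sample_rate, cutoff_hz=120.0):
    """Return (main_bus, lfe_bus).

    The main bus keeps the full-range signal; the LFE bus receives only the
    low-passed content, so no dedicated LFE sample is needed.
    """
    return list(samples), one_pole_lowpass(samples, cutoff_hz, sample_rate)


def rms(samples):
    return math.sqrt(sum(s * s for s in samples) / len(samples))


SAMPLE_RATE = 48000
rumble = [math.sin(2 * math.pi * 40 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
detail = [math.sin(2 * math.pi * 4000 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]

_, lfe_from_rumble = route_with_lfe_send(rumble, SAMPLE_RATE)
_, lfe_from_detail = route_with_lfe_send(detail, SAMPLE_RATE)
print(f"40 Hz content reaching the LFE bus: {rms(lfe_from_rumble):.3f} RMS")
print(f"4 kHz content reaching the LFE bus: {rms(lfe_from_detail):.3f} RMS")
```

The 40 Hz rumble passes to the LFE bus almost untouched, while the 4 kHz detail is heavily attenuated, so the same source asset feeds both buses.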

Talk 2 – 1:30pm – 2:30pm

Thinking Outside of the Box: Working with Other Musicians to Realize Your Game Soundtrack

This roundtable discussion, hosted by thatgamecompany’s Vincent Diamante along with musicians Jeff Ball and Kristin Naigus and mix engineer Kyle Johnson, offered practical advice on how to manage and maximize the production value of your music by employing live musicians. The discussion started from the premise that a MIDI composition is still a performance, and that including a live instrument can add imperfections that bring a human element to your work. Many composers now have their work recorded by Eastern European orchestras, which have become quite affordable in recent years. The advice here is to check that your orchestration is solid and that your parts are easy to read, minimizing the chance that the music will be misinterpreted.

Talk 3 – 3:00pm – 4:00pm

An Interactive Sound Dystopia: Real-Time Audio Processing in ‘Nier:Automata’

In this talk, Platinum Games’ Shuji Kohata explains how they used real-time audio processing to maximize the interactive potential of the audio in ‘Nier:Automata’. Before working in the games industry, Shuji Kohata designed digital guitar effects for Roland. Now he uses that expertise to give sound designers greater creative freedom and flexibility, with tools such as spatial audio, reverb and other effects that run in real time in the game. One standout example is a spatial audio system that can deliver 3D audio on any playback system. Another is an interactive reverb system that uses raycasts, whose collision points serve as references for distance, reverb level and filtering. Hacking is an important component of ‘Nier:Automata’s’ game mechanics; for this, Shuji created a processor that bitcrushes the music into 8-bit chip sounds.
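
Bitcrushing itself is a simple effect: reduce the bit depth (quantize each sample to fewer levels) and, usually, the sample rate (hold each value for several samples). The Python sketch below shows that generic technique only. It is not Shuji Kohata’s processor, and the parameter names and defaults are assumptions.

```python
import math


def bitcrush(samples, bits=8, hold=4):
    """Generic bitcrusher for float samples in -1.0..1.0.

    bits: target bit depth; each sample snaps to one of 2**bits levels.
    hold: sample-and-hold factor; every input sample at a multiple of `hold`
    is quantized and then repeated, lowering the effective sample rate.
    """
    if bits < 1 or hold < 1:
        raise ValueError("bits and hold must be at least 1")
    steps = 2 ** (bits - 1)  # levels per polarity, like a signed integer format
    out, held = [], 0.0
    for i, s in enumerate(samples):
        if i % hold == 0:
            clamped = max(-1.0, min(1.0, s))
            level = max(-steps, min(steps - 1, round(clamped * steps)))
            held = level / steps
        out.append(held)
    return out


# A short 440 Hz sine, crushed to 4 bits with every value held for 6 samples.
tone = [math.sin(2 * math.pi * 440 * n / 48000) for n in range(48)]
crushed = bitcrush(tone, bits=4, hold=6)
print(crushed[:12])
```

Lower `bits` values make the stepped, “chip” character more obvious, while larger `hold` values add the aliasing typical of low sample rates.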

Further Reading:

Happy Hacking: Music Implementation in NieR:Automata

The Hands-on Sound Design of NieR:Automata

Friday Night

Friday nights at GDC are always filled with mixed emotions. We find ourselves exhausted from the early mornings, late nights and intense learning, sad as we prepare to bid farewell to old friends and new, and excited with the anticipation of putting all that we’ve learned into practice.

On this Friday night I had the good fortune of running into friends from the Game Audio Hour podcast, and joined them for a tasty meal in Chinatown, followed by a quick drink at a nearby bar. On the Friday evening of every GDC, Leonard Paul, from the School of Video Game Audio, hosts a party for game audio folks at a restaurant near the conference. This year’s venue was the California Pizza Kitchen. Since I was with friends in Chinatown, I didn’t make it to the event until later in the evening. By that time, things had mellowed quite a bit, but the conversations were still flowing.

[/showhide]

Well, that’s it for GDC 2018! If you want to catch up on anything you missed, GDC releases a lot of slides and videos from the talks (some free, some members-only) in the GDC Vault, and they are totally worth checking out. I don’t know about you, but I’m already looking forward to next year.



We hope you enjoyed Matthew’s review, check out others in our Reviews section. Don’t forget to sign up to our Monthly Newsletter to make sure you don’t miss out on our reviews and interviews.

We’re also running a Patreon campaign to make sure we can keep bringing you regular, high-quality content, if you’re feeling generous! Thanks even for just sharing!

The Sound Architect